What we really need is outside of the algorithm

When your worldview gets darker the longer you scroll on IG or TikTok, accept this invitation to rescue your attention from algorithm-curated spaces and return it to human curation, moderation, and action.

When I say "these spaces," "the platform," and "here" in this audio and lightly-edited transcript, I am referring to the social media apps where this was first posted.

Why does it feel like the longer that you and I are scrolling in these spaces, the less confident we feel in ourselves, in humankind, and in whether anything we do means anything at all?

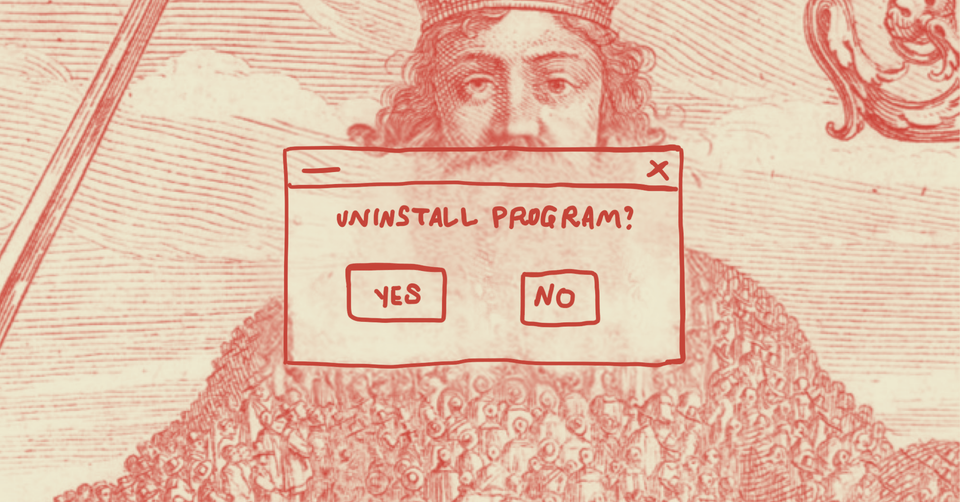

Algorithms aren't designed to give us things in healthy doses. Algorithms don't moderate well and they don't teach us how to moderate ourselves better either. This is why when we scroll, we just have no idea what we're going to see next. It could be genocide content, it could be kitten content, it could be someone like me talking about scrolling itself. Each thing has been separated from its original space and time, and then shuffled into a big deck and then given back to you at random, just so that ads have something to hold on to.

Without that spatial or temporal context, even the most important information (and there's lots of important information in these spaces) can leave us feeling pretty dull, pretty numb, pretty doomery for lack of a better word. It makes us feel rage that has nowhere to go.

Our natural and healthy desire to build the world we want is redirected and rerouted back into the platform.

So it's good to remember that when an algorithm recommends something to you, it's not because it saw that thing, cared about you, thought about you, and really wanted you to see it so that maybe we can hang out and talk about it later. No! Algorithms don't have opinions. They don't have tastes.

Do I learn new things [on Instagram, TikTok, Twitter]? Yes, all the time. It's why I'm still here. But even that natural and healthy pleasure of learning something new, maybe even feeling like morally on the right side of history, is something that algorithms and the attention economy can and will hack. I think there's something healthy about rage. Rage indicates to us that something's wrong and needs to be fixed and something needs to change and maybe we can change that. But since the attention economy is about capturing engagement, the platforms we are on right now will say: "ok here is a horrific and disgusting event, now let's DO something about it— like share it, post something, keep scrolling maybe?"

What happens is that our natural and healthy desire to build the world we want is redirected and rerouted back into the platform. And then it's diffused, it's scattered. That does something to our attention span too, right? Even off-platform, the way we consume things can end up being kind of diffused and scattered. If you're brain-rotten like me, you know what it's like to have that urge to scroll on your phone while you're watching something else.

Because algorithms are not going to give us what we need, we have to translate what we digest on social platforms into a commitment or action outside of that platform.

We have to accept that algorithms and "AI" are not going to give us what we need. Yes, these things can be useful, but we have to accept that what we need is most likely off [Instagram, TikTok, Twitter]. I'm not saying delete your account (and some of us do come and go). It's more the idea that whatever we take in from these spaces has to translate into a commitment or action that takes place OUTSIDE of that platform.

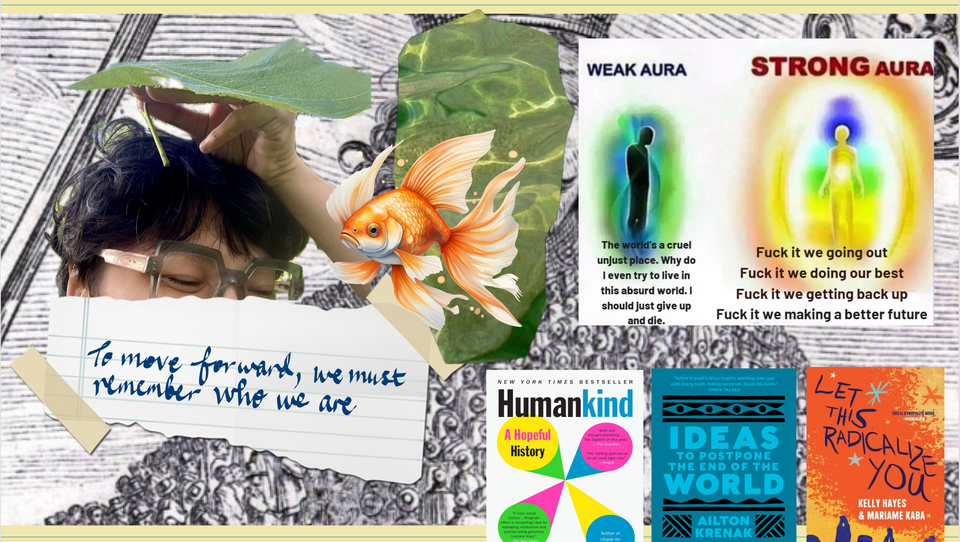

I can give two examples. One is the rise (revival) of the slow web and of newsletters off of these social platforms, where you don't get whiplash, because you're looking at and digesting something that a human being has made for us to take in, not to try to sell us something. I'm not talking about people who are trying to sell us something. I'm talking about human-curated playlists, whether it's playlists of links or music or whatever: a gift that someone else has made thoughtfully, with the idea that 'if I leave this here, someone can come and take it if they want, if it resonates with them.' That is more real and possibly more what we need (for our attention span at least) than anything an algorithmically curated playlist could give me.

Another example I'll give is that one year on from October 2023, I haven't had McDonald's, and I don't think I am ever going to have McDonald's again. It's a tangible action (that reflects my shift in thinking) and a commitment. It may seem small, but it's something I wasn't doing a year ago, and something I didn't even know much about before. And now that I've learned better and know better, I'm never going to be the same again. This is how I am going to demonstrate that. That could look like so many other things for other people depending on where you are, all the way up to maybe even the level of sabotage.

A deeper attention span is going to be incredibly useful for times to come.

Fixing or practicing our attention span— a deeper attention span— is going to be incredibly useful for times to come. Even if we live in a hypothetical world where everyone agrees on everything and is correct about everything all the time, we are still going to be limited by our attention and our time. These two things are expensive. And to practice your attention span, to focus your undivided attention on something you know is important, or even just meditation, which is the free action of "doing nothing" at all for a certain amount of time... focusing on just one thing and not taking attention away from that, that practice is going to be so useful in times to come. And it definitely will help with that feeling that I talked about before.

When your world starts feeling as black-and-white as the world of the algorithm, come back to human moderation, curation, and action.

So when your world starts to feel as black-and-white as the world of the algorithm, where the longer you're in algorithm-moderated space, the more you feel like we're all doomed, nothing's going to change, and everything's hopeless, take that as a sign that this space, this way of living in the algorithm, isn't giving you what you need right now, and that you need to learn to moderate for yourself in spaces that are algorithm-free.

Come back to human moderation. Come back to human curation.

Come back to doing things with the people you love (or won't leave behind) and seeing what other people have left out there for you, on the big wide world of the internet, about the thing you want to learn more about. It's all there for you, along with maybe more interesting rabbit holes you can go down that won't pull your attention span in 20 different directions.

/end of transcript

Resource channel: attention outside of the algorithm

Watch the essay that very much inspired this rant

This video essay from chriswaves was really reaffirming to me re: the value of human curation and moderation for the sake of itself instead of for 'profit.' He also cites Jenny Odell's How To Do Nothing, a book on the attention economy that a friend invited me to read with her in 2019 and that I still think about today.

I've been making playlists for friends and others from the moment I learned how to 'burn' a CD-R, and in mid-2023 I started making curated lists for people to stumble upon and find something in them that I find interesting/useful too. I still update them all the time:

Resource channel

I also made a resource channel for this idea that you can see here: